Documenting machine learning models

Products use machine learning and “artificial intelligence” to do things like recommend a song to listen to, offer a quick response to an email, organize search results, provide a transcript of a meeting, and more. Some products rely on ordinary data analysis to construct insights about things like your business performance in a market, or the conversion rates for your online shopping site.

Unfortunately, the companies providing these products often gloss over the technical details of how these systems work, making it seem like those tools are magic, omniscient, or just plain inscrutable. Algorithms are everywhere, yet the logic programmed into these systems is routinely hand-waved away.

As integration of machine learning, artificial intelligence, algorithms, and data analysis becomes standard and expected in software products, internal and external documentation for those systems must also become standard.

I’m a technical writer, so it’s perhaps not surprising that I think documentation is important.

Writing documentation is a way to communicate information about your product—and that information in turn lets others use and understand your product.

Commenting code, providing READMEs, writing how-to guides — all of these forms of documentation help people understand and interpret your code, evaluate your project, and use your product.

The normalization of data-driven software, where machine learning models drive key product functionality, means that folks in operations, procurement, legal, and other departments need to understand the components of the product, how it might interact with their system and users, and what risks the business might face as a result.

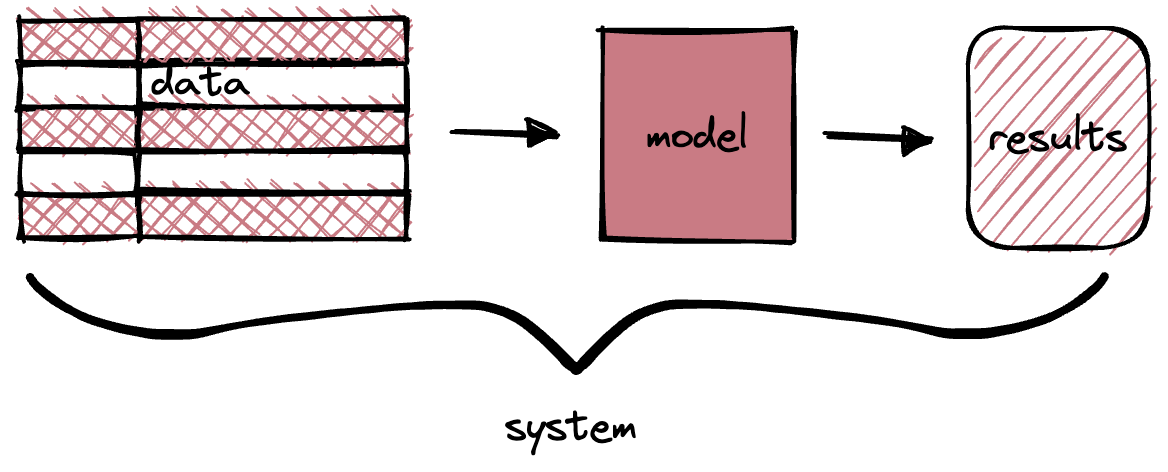

That makes it extremely important to document each component of a data-driven system, from the datasets involved in data analysis and machine learning model training, to the machine learning models and model results, to the systems in which the machine learning models exist.

When you evaluate software, you expect product documentation. It would require an immense amount of trust, and likely some promises, to buy a software product, or even to start using a free one, that lacks documentation. It’s also much faster and easier to start using software if it’s well-documented.

But machine learning models and datasets available on the web are often not well-documented.

Sometimes you don’t notice the gap because you encounter a machine learning model without realizing it. You’re listening to music and a model is queuing up the next recommended track in Spotify’s autoplay, or you’re browsing the web and you read an article generated by a large language model. Other times, you’re more aware that you’re interacting with a model, such as when you feed a prompt into Midjourney or Stable Diffusion, or ask ChatGPT a question.

Much like Apple might want you to feel when using their products, artificial intelligence aficionados want you to be able to use their trained models without the friction of documentation about how they work.

But documentation can be vital to make AI-based products more useful. For tools based on generative models, like Midjourney or ChatGPT, understanding what led to the output that you see can help you tweak your input to produce more useful results.

This holds true for data analysis and other machine learning implementations as well, such as a data analysis project in an organization or an internally deployed machine learning model that supports business processes. In each case, documentation helps people interpret and trust the results.

Randy Au makes the case for documenting a data analysis process in his post Data Science Practice 101: Always Leave An Analysis Paper Trail:

Despite how much busy work it sounds like right now, you need to leave a paper trail that can clearly be traced all the way to raw data. Inevitably, someone will want you to re-run an analysis done 6 months ago so that they can update a report. Or someone will reach out and ask you if your specific definition of “active user” happened to include people who wore green hats. Unless you have a perfect memory, you won’t remember the details and have to go search for the answer.

Analysis deliverables are often separated from the things used to generate it. Results are sent out in slide decks, a dashboard on a TV screen, or a chart pasted into an email, a single slide in a joint presentation for executives, or just random CSV dumps floating around in a file structure somewhere. The coupling between deliverable and source is non-existent unless we deliberately do something about it.

Without a clear definition of how an analysis was performed, how data points were defined, or where a particular chart came from, the results lose value, credibility, and reproducibility. It helps the future version of you, as well as folks that you collaborate with, if you document the context of a project and store that documentation alongside the output of the project.

Similarly, without a clear sense of how a model was trained or why it might be producing specific results, the results lose value and credibility. In an era where generative machine learning models output fabricated academic references when you ask them for citations about a topic, documentation about the systems becomes even more valuable to help you judge which machine learning model output you can trust.

If you have clear documentation about how the model was trained and on which datasets, you can more accurately assess whether the outcomes delivered by your model are valid or the result of an error such as testing on your training data.
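
As a minimal sketch of that kind of check, assume your documentation lets you get back to the exact records used for training. The helpers below (hypothetical names, with made-up sample records) fingerprint each record and report which evaluation records also appear in the training data:

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Return a stable SHA-256 hash of a record, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_leaked_records(train: list, test: list) -> list:
    """Return the test records whose fingerprint also appears in the training set."""
    train_fingerprints = {fingerprint(record) for record in train}
    return [record for record in test if fingerprint(record) in train_fingerprints]


# Hypothetical example data, for illustration only.
train_records = [
    {"text": "great product", "label": 1},
    {"text": "arrived broken", "label": 0},
]
test_records = [
    {"label": 0, "text": "arrived broken"},  # same record, different key order
    {"text": "works as described", "label": 1},
]

leaked = find_leaked_records(train_records, test_records)
```

Exact-match hashing only catches verbatim duplicates. Near-duplicates, such as the same text with different whitespace, would need a normalization step that this sketch leaves out.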

As Emily Bender points out in the Radical AI podcast episode, The Limitations of ChatGPT with Emily M. Bender and Casey Fiesler:

If you don’t know what’s in the training data, then you’re not positioned to decide if you can safely deploy the thing.

Especially if you’re using a machine learning model in a commercial context, you want to be able to identify possible harm that could arise from the output, such as violent, misogynistic, racist, or other dangerous output. Without information about the training datasets, model training practices, and overall system context for a model, you can’t properly evaluate it.

Provide helpful documentation

What does it mean to provide helpful documentation for the components of machine learning and data analysis practices?

As with any documentation, you need to consider the following:

Who is the audience?

The audience of the documentation depends on the context of the analysis or the model. The documentation for a dataset distributed on the web for free on Kaggle has a different audience than the documentation for a machine learning model deployed internally at a large financial institution to detect fraudulent credit card transactions. As such, the content of your documentation might change accordingly to include more or less detail about specific aspects of the data.

What purpose does the documentation serve?

Similarly, the purpose of the documentation is different for a publicly available dataset when compared to an internally deployed machine learning model, and is different still from a product that provides a chat interface for a large language model. The documentation for a machine learning model used by a financial services company needs to exist for regulatory and auditing purposes, in addition to the typical purpose of remembering how the model works.

How do you provide the documentation?

How you provide the documentation also differs depending on what you need to document. You might be able to add inline comments to a dataset, but then you don’t have a good way to provide an overview of the dataset itself. A machine learning model offers no simple way to include documentation as part of the training output. So far, most standards involve providing a PDF with information, but others, such as Hugging Face Dataset Cards, use a Markdown file with a YAML-formatted metadata header that you publish alongside the dataset on the Hugging Face Hub.
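
To make the file-based approach concrete, here is a rough sketch that renders a Markdown card with a YAML metadata header, which is how Hugging Face dataset cards are structured as far as I know. The `render_dataset_card` helper is hypothetical, and the metadata keys and values are illustrative assumptions rather than a complete or validated specification:

```python
def render_dataset_card(metadata: dict, overview: str) -> str:
    """Render a Markdown card that starts with a YAML metadata header.

    Only plain strings and lists of plain strings are supported. Values that
    need YAML quoting (for example, text containing ': ') are out of scope.
    """
    lines = ["---"]
    for key, value in metadata.items():
        if isinstance(value, list):
            lines.append(f"{key}:")
            lines.extend(f"- {item}" for item in value)
        else:
            lines.append(f"{key}: {value}")
    lines.append("---")
    lines.append("")
    lines.append(f"# Dataset Card for {metadata['pretty_name']}")
    lines.append("")
    lines.append(overview)
    return "\n".join(lines) + "\n"


# Hypothetical metadata, for illustration only.
card = render_dataset_card(
    {"pretty_name": "Example Reviews", "license": "cc-by-4.0", "language": ["en"]},
    "Customer reviews collected to illustrate dataset documentation.",
)
```

Writing `card` to a `README.md` file next to the data keeps the overview and the dataset together, which addresses the problem that inline comments can’t solve.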

What do you put in the documentation?

When it comes to determining what the documentation should contain, it depends on which component of the machine learning process you’re documenting, and there are a number of standards proposed by academics and prominent players in the data industry.

How to document machine learning components

If you’re deploying machine learning models in your business operations, disseminating the results of data analysis, or integrating machine learning into your product, you need to write documentation. What you write depends on the part of the system that you choose to document.

There are several perspectives to consider:

- documenting the datasets

- documenting the models

- documenting the model results, or output

- documenting the system

Documenting the datasets

When you focus on documenting the datasets, you want to capture things like:

- How recently the data was collected

- From where (digitally and geographically)

- How representative the data is against various parameters

- Why the dataset was created

For labeled datasets, you want to consider additional components:

- Which annotation process was used

- How much data was annotated

- Which measures you used to validate the annotations

- Who annotated the data
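
One way to keep track of those questions is to treat them as fields in a structured record and flag the ones nobody has answered yet. This is a sketch under my own assumptions: the class and field names are hypothetical and do not come from any of the standards listed below.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class AnnotationRecord:
    process: str = ""              # which annotation process was used
    annotated_share: str = ""      # how much data was annotated
    validation_measures: str = ""  # how the annotations were validated
    annotators: str = ""           # who annotated the data


@dataclass
class DatasetRecord:
    collection_period: str = ""    # how recently the data was collected
    digital_source: str = ""       # where the data came from, digitally
    geographic_source: str = ""    # where the data came from, geographically
    representativeness: str = ""   # how representative the data is
    motivation: str = ""           # why the dataset was created
    annotation: Optional[AnnotationRecord] = None  # only for labeled datasets


def unanswered(record) -> list:
    """List the names of text fields that are still empty."""
    missing = []
    for f in fields(record):
        value = getattr(record, f.name)
        if isinstance(value, str) and not value.strip():
            missing.append(f.name)
        elif isinstance(value, AnnotationRecord):
            missing.extend(f"annotation.{name}" for name in unanswered(value))
    return missing


# Hypothetical, partially documented dataset.
record = DatasetRecord(
    collection_period="2023-01 to 2023-06",
    digital_source="support ticket export",
    motivation="train a ticket-routing classifier",
    annotation=AnnotationRecord(
        process="two annotators per ticket",
        annotators="in-house support staff",
    ),
)
gaps = unanswered(record)
```

Here `gaps` lists the geographic source, representativeness, and two annotation details as still undocumented.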

Standards for documenting datasets:

- Data Cards from Google, which aim to

“provide structured summaries of ML datasets with explanations of processes and rationale that shape the data and describe how the data may be used to train or evaluate models.”

- Datasheets for Datasets from Microsoft, which provide a framework of

“questions about dataset motivation, composition, collection, pre-processing, labeling, intended uses, distribution, and maintenance. Crucially, and unlike other tools for meta-data extraction, datasheets are not automated, but are intended to capture information known only to the dataset creators and often lost or forgotten over time.”

- Data Statements from University of Washington, which offer

“a characterization of a dataset that provides context to allow developers and users to better understand how experimental results might generalize, how software might be appropriately deployed, and what biases might be reflected in systems built on the software.”

- Data Biography from We All Count, which you can use to record

“a comprehensive background of the conception, birth and life of any dataset … an essential step along the path to equity in data science.”

- Dataset Cards from Hugging Face, which

“help users understand the contents of the dataset and give context for how the dataset should be used.”

- Dataset Nutrition Labels from MIT and Harvard University, which offer a

“diagnostic framework that lowers the barrier to standardized data analysis by providing a distilled yet comprehensive overview of dataset “ingredients” before AI model development.”

Documenting the models

In addition to documenting the datasets used for data analysis or machine learning training, you also need to document the models trained on the datasets.

When documenting a machine learning model, you want to capture things like the following:

- What data was used to train the model

- How the model was trained

- How the model features were tuned

- Why the model was created

- How the model output was tested

For versioned machine learning models, it’s also helpful to include context about what is different between one version of the model and the previous, such as:

- Why the new version of the model was created

- What training data is different between this version and the previous version
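
For versioned models, part of that context can be derived rather than written by hand. The sketch below assumes that each version’s documentation names its training datasets. The record type, dataset names, and reasons are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersionRecord:
    version: str
    reason: str                   # why this version of the model was created
    training_datasets: frozenset  # names of the datasets used for training


def training_data_changes(previous: ModelVersionRecord, current: ModelVersionRecord) -> dict:
    """Summarize which training datasets were added or removed between versions."""
    return {
        "added": sorted(current.training_datasets - previous.training_datasets),
        "removed": sorted(previous.training_datasets - current.training_datasets),
    }


# Hypothetical version history.
v1 = ModelVersionRecord(
    version="1.0",
    reason="initial fraud detection model",
    training_datasets=frozenset({"transactions-2022"}),
)
v2 = ModelVersionRecord(
    version="1.1",
    reason="retrained after performance drifted",
    training_datasets=frozenset({"transactions-2022", "transactions-2023"}),
)
changes = training_data_changes(v1, v2)
```

The diff only covers dataset names. The reason for the new version still has to be written by a person.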

Standards for documenting machine learning models:

- Model Cards from Google, which

“provide practical information about models’ performance and limitations in order to help developers make better decisions about what models to use for what purpose and how to deploy them responsibly.”

- Data Portraits from Johns Hopkins University, which provide

“artifacts that record training data and allow for downstream inspection”, making it easier for model evaluators to perform tasks like determining if an example output was part of the data input for a model.

- Reward Reports from University of California, Berkeley, which are specific to reinforcement learning models and provide a framework for documenting the intended behavior of a given reinforcement learning model and the reinforcement tactics it employs.

Documenting the model results

It’s important to document the results produced by a model in specific scenarios, which can help you debug and retrain the model as needed. Documentation about model results is often referred to as “explainable AI”, as the goal is to explain the outcomes produced by artificial intelligence (one or more machine learning models, or algorithms).

When you document the model results, you want to collect the following information:

- The dataset used to train the machine learning model (and the documentation for the dataset)

- The version of the machine learning model (and the documentation for the model, such as the model features)

- The input into the trained model

- The output of the trained model, in response to the input

- The expected output of the trained model, based on the input

- How close the expected output was to the actual output, based on the input

- Key metrics such as precision, recall, and F1 scores for the model results, especially across different feature segments

- Possible reasons why the model produced that particular output
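
The metrics in that list are straightforward to compute once you record expected and actual outputs together. The following sketch assumes a binary classifier and result records shaped as dictionaries with hypothetical `segment`, `expected`, and `actual` keys. It reports precision, recall, and F1 for each segment:

```python
from collections import defaultdict


def precision_recall_f1(pairs: list) -> dict:
    """Compute binary precision, recall, and F1 from (expected, actual) pairs."""
    tp = sum(1 for expected, actual in pairs if expected == 1 and actual == 1)
    fp = sum(1 for expected, actual in pairs if expected == 0 and actual == 1)
    fn = sum(1 for expected, actual in pairs if expected == 1 and actual == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def metrics_by_segment(results: list) -> dict:
    """Group result records by segment and compute the metrics for each group."""
    grouped = defaultdict(list)
    for result in results:
        grouped[result["segment"]].append((result["expected"], result["actual"]))
    return {segment: precision_recall_f1(pairs) for segment, pairs in grouped.items()}


# Hypothetical logged results for a binary classifier.
results = [
    {"segment": "new_customers", "expected": 1, "actual": 1},
    {"segment": "new_customers", "expected": 0, "actual": 1},
    {"segment": "returning_customers", "expected": 1, "actual": 1},
    {"segment": "returning_customers", "expected": 1, "actual": 0},
]
report = metrics_by_segment(results)
```

In this made-up data, both segments end up with the same F1 score for opposite reasons: one has low precision and the other has low recall. That is the kind of difference that a single aggregate number hides.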

Standards for documenting machine learning model results:

- Method Cards from Meta, which

“aim to support developers at multiple stages of the model-development process such as training, testing, and debugging” with prescriptive “instructions on how to develop and deploy a solution or how to handle unexpected situations”

- Four Principles of Explainable Artificial Intelligence from NIST, which proposes

“that explainable AI systems deliver accompanying evidence or reasons for outcomes and processes; provide explanations that are understandable to individual users; provide explanations that correctly reflect the system’s process for generating the output; and that a system only operates under conditions for which it was designed and when it reaches sufficient confidence in its output.”

- AI Explainability Toolkit from IBM, which provides an extensible toolkit of options to explain AI depending on the audience of the explanation, such as

“data vs. model, directly interpretable vs. post hoc explanation, local vs. global, static vs. interactive”

- Standard for XAI from IEEE, which

“defines mandatory and optional requirements and constraints that need to be satisfied for an AI method, algorithm, application or system to be recognized as explainable.”

- EUCA: End-User-Centered Explainable AI Framework from Simon Fraser University, which offers

“a prototyping tool to design explainable artificial intelligence for non-technical end-users.”

Documenting the system

It’s important to document the entire system in which a machine learning model operates. A machine learning model is never implemented as a discrete object. Models must be kept updated to avoid drift and thus are implemented as part of a larger system that can include data quality tools, a testing framework, a build and deploy framework, and even other models, such as in the context of an ensemble model.

Documentation about the entire system offers helpful guidance to folks trying to understand a system so that they can maintain, update, debug, and audit the system, to name a few common tasks.

As such, machine learning system documentation needs to include the following:

- Details about which tools exist in the system

- Details about how updates to the system are performed

- Dependencies within the system, including people

- Data flows within the system

- For models within the system, documentation for those models
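
If you keep the system description in a structured form, you can check it for gaps automatically. This sketch assumes a hypothetical dictionary layout with `components` and `data_flows` keys, where every component names an owner and every model links to its own documentation. None of these names come from the standards listed below.

```python
def check_system_doc(system: dict) -> list:
    """Return a list of human-readable gaps found in a system description."""
    problems = []
    components = system["components"]
    # Every data flow should start and end at a documented component.
    for source, target in system["data_flows"]:
        for name in (source, target):
            if name not in components:
                problems.append(f"data flow references undocumented component: {name}")
    for name, info in components.items():
        # Models need a link to their own documentation.
        if info["kind"] == "model" and not info.get("model_doc"):
            problems.append(f"model has no linked documentation: {name}")
        # Dependencies include people, so every component needs an owner.
        if not info.get("owner"):
            problems.append(f"component has no owner: {name}")
    return problems


# Hypothetical system description.
system = {
    "components": {
        "ingest": {"kind": "tool", "owner": "data-eng"},
        "fraud_model": {"kind": "model", "owner": "ml-team", "model_doc": ""},
    },
    "data_flows": [("ingest", "fraud_model"), ("fraud_model", "alerts")],
}
problems = check_system_doc(system)
```

In this example, the check flags the `alerts` component, which appears in a data flow but is not documented, and the model that has no linked documentation.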

Standards for documenting machine learning systems:

- System Cards from Meta, to

“provide insight into an AI system’s underlying architecture and help better explain how the AI operates.”

- FactSheets from IBM, which provide

“a collection of relevant information (facts) about the creation and deployment of an AI model or service. Facts could range from information about the purpose and criticality of the model, measured characteristics of the dataset, model, or service, or actions taken during the creation and deployment process of the model or service.” with the objective of allowing “a consumer of the model to determine if it is appropriate for their situation.”

- DAG Cards from Metaflow, which offer

“a form of documentation encompassing the tenets of a data-centric point of view. We argue that Machine Learning pipelines (rather than models) are the most appropriate level of documentation for many practical use cases, and we share with the community an open implementation to generate cards from code.”

Actually write the documentation

Making sure the documentation actually gets written is the most important aspect of documenting machine learning components and systems.

You can choose to automate portions of the documentation, identify points in the model development process where it would be prudent to update part of the template that you choose, or do whatever else works for your team and your processes. You can also define robust accountability mechanisms like checklists.
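
A checklist can be as simple as a script that confirms the expected sections exist. The sketch below assumes your documentation is a Markdown file with one heading per section. The required section titles are placeholders that you would replace with the sections of the template you chose.

```python
# Placeholder section titles; substitute the sections from your chosen template.
REQUIRED_SECTIONS = [
    "Training data",
    "Training procedure",
    "Intended use",
    "Evaluation",
]


def missing_sections(card_text: str, required=REQUIRED_SECTIONS) -> list:
    """Return the required section titles that do not appear as a heading line."""
    headings = {
        line.lstrip("#").strip().lower()
        for line in card_text.splitlines()
        if line.startswith("#")
    }
    return [title for title in required if title.lower() not in headings]


# Hypothetical, incomplete model documentation.
card_text = """# Fraud model

## Training data
Card transactions from 2022.

## Evaluation
Precision and recall per customer segment.
"""
missing = missing_sections(card_text)
```

Running a check like this as part of a review or build step, and failing when `missing` is not empty, turns the checklist into something that is hard to skip. It only confirms that the sections exist, not that their content is useful.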

If you lack processes and accountability, it’s easy to skip writing documentation. All the standards in the world for documenting data-driven systems don’t matter if the documentation never gets written.

Most fields struggle to incorporate documentation into their processes and to build accountability for making sure it gets written, but fast-moving fields like data science and machine learning, which are still formalizing their practices, struggle even more.

No matter which method you choose for documenting data-driven systems, you must include writing the documentation in your existing workflows.