Improving documentation findability in an age of low-quality search results

For months, there have been reports of deteriorating search quality 1. As search results get worse, so does an important factor that makes technical documentation so useful: its findability.

In an era of web-first authoring and product-led growth marketing strategies, organic documentation findability matters. For some software documentation sites, at least 80% of traffic to the site comes from organic search.

You don’t want to abandon all efforts at optimizing for search engine results 2, but it might be time to reduce your reliance on search as the primary path to documentation findability.

If we stop expecting readers to discover documentation on their own using search, how do we as technical writers make sure the information can still be found? What’s next for documentation findability?

Get social: Invest in your community

A common tactic to improve search result quality is to append “reddit.com” to a search query. The results tend to be better because they’re written and curated by people, rather than algorithmically surfaced 3.

Reddit is just one site with human-moderated and curated conversations — your community is also having discussions in Discord or Slack communities, in the comment thread of a Substack newsletter, in person at events, and more.

If you support and engage with your community by responding to documentation feedback and requests, your community members show up to these conversations and discussions with awareness of the documentation—not just their most-referenced pages, but recently updated and published ones as well.

Why does this matter? I always say that my “north star metric” of documentation success is “if someone asks a question and a link to the documentation can answer it”.

If you write high quality documentation, and build champions of your product (and your content), those champions bring your content to the product and business problem conversations happening across the community.

That sounds amazing — but how do you make sure those champions find your content?

Beyond the tooltip: Bring the docs to the product

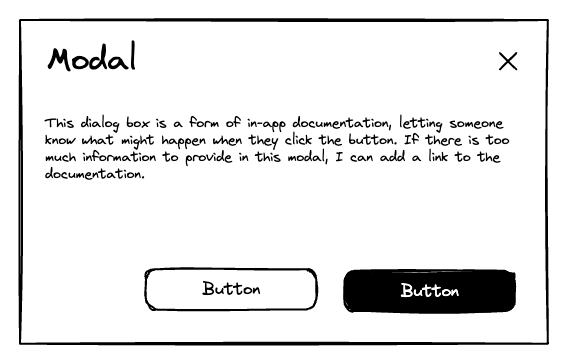

If you document a product with a user interface, you likely have some amount of in-product help. Oftentimes, that can be pretty basic.

For example:

- A tooltip to describe something.

- A “learn more” link in an error message that goes to the documentation.

- A sentence of explanatory text in a dialog box that links to the documentation.

- A link to the documentation.

- A search box in the product that lets you search the documentation.

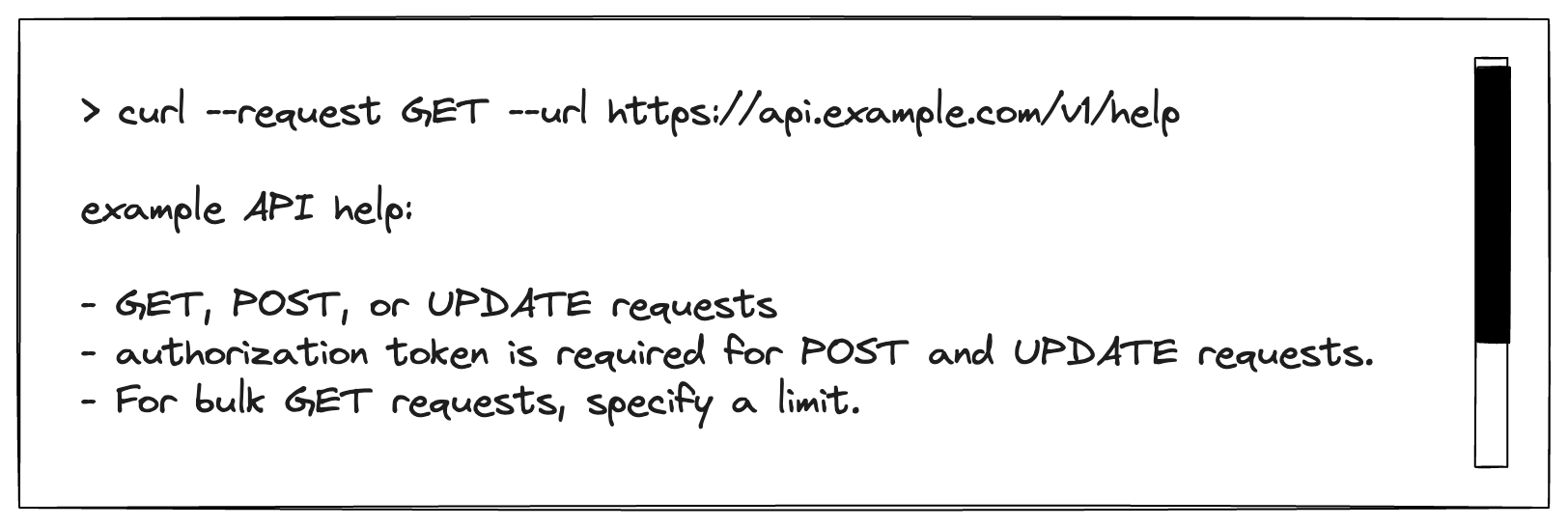

- A `help` command in a command-line interface with varying amounts of information available.

As interfaces get more complex and documentation content gets harder to find on the web, it’s past time to think beyond the tooltip and consider other ways to incorporate documentation into the interface in a helpful, maintainable fashion.

We’ve all encountered the overly aggressive product tour in a new web app, “helpfully” highlighting different parts of the interface the very first time you open it. It either distracts you from the task you came to do, or shows you new features when you’re still exploring and not even sure what your task might be yet.

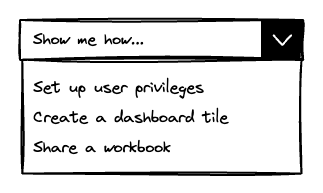

Instead, some other modalities could help:

- Interactive tutorials that use the product to explain it — example code or templates.

- A “show me how” option that demonstrates a discrete task on demand, rather than a tour that appears on first page load.

- Autocomplete for commands with in-line guidance about required arguments and parameters.

- More robust CLI-based assistance.

- An API parameter that provides usage details for the endpoint.

- High quality error messages.
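As a rough illustration of the last two items in the list above, here is a minimal Python sketch of an API that can describe its own usage and that returns error messages pointing back to the documentation. Everything in it is an assumption made for the example: the endpoint path, the parameter names, the error fields, and the `docs.example.com` URL are all hypothetical placeholders, not a real product's API.

```python
import json

# Hypothetical docs site and endpoint metadata; replace with your own.
DOCS_BASE_URL = "https://docs.example.com"

ENDPOINT_USAGE = {
    "/v1/devices/updates": {
        "summary": "Enable or disable automatic updates for a device.",
        "required_params": ["device_id", "enabled"],
        "docs_url": f"{DOCS_BASE_URL}/devices/enable-updates",
    }
}


def describe_endpoint(path):
    """Return usage details for an endpoint, or None if the path is unknown.

    A server could return this when a request asks for usage details,
    for example through a (hypothetical) ?usage=true parameter.
    """
    return ENDPOINT_USAGE.get(path)


def missing_param_error(path, params):
    """Build an error body that says what went wrong, how to fix it,
    and where to read more. Returns None if nothing is missing."""
    usage = ENDPOINT_USAGE[path]
    missing = [name for name in usage["required_params"] if name not in params]
    if not missing:
        return None
    names = ", ".join(missing)
    return {
        "error": "missing_required_parameter",
        "message": f"Missing required parameter(s): {names}.",
        "hint": f"Add {names} to the request and try again.",
        "docs_url": usage["docs_url"],
    }


if __name__ == "__main__":
    error = missing_param_error("/v1/devices/updates", {"device_id": "abc123"})
    print(json.dumps(error, indent=2))
```

The point of the sketch is that the error names the problem, suggests a fix, and links to the relevant page, so the documentation shows up at the moment someone needs it.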

Not all of these modalities are new, but they are still easily neglected!

Having help available in these areas isn’t enough. If your command-line tool has a help interface, does it list the available arguments for a command with no context, or is it descriptive and useful? If your API endpoint returns error messages, how well do they help the recipient fix the problem?
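To make the command-line question concrete, here is a small sketch using Python's standard `argparse` module. The tool name `devicectl`, its `updates` subcommand, the flags, and the documentation URL are all invented for illustration. What matters is that every argument carries a description, the subcommand states its prerequisite, and the help output includes an example and a link to the full docs.

```python
import argparse


def build_parser():
    """Build a parser whose help text explains itself (hypothetical tool)."""
    parser = argparse.ArgumentParser(
        prog="devicectl",  # hypothetical tool name
        description="Manage settings on a device.",
        # Placeholder URL: point this at your own documentation.
        epilog="Full documentation: https://docs.example.com/devicectl",
    )
    subcommands = parser.add_subparsers(dest="command", metavar="COMMAND")

    updates = subcommands.add_parser(
        "updates",
        help="Turn automatic updates on or off.",
        description=(
            "Turn automatic updates on or off. "
            "Requires admin privileges on the device."
        ),
        epilog="Example: devicectl updates --enable --device kitchen-display",
    )
    # Exactly one of --enable / --disable must be given.
    toggle = updates.add_mutually_exclusive_group(required=True)
    toggle.add_argument("--enable", action="store_true",
                        help="Turn automatic updates on.")
    toggle.add_argument("--disable", action="store_true",
                        help="Turn automatic updates off.")
    updates.add_argument(
        "--device",
        required=True,
        metavar="NAME",
        help="Name of the device to change, as shown in the device list.",
    )
    return parser


if __name__ == "__main__":
    # Print the top-level help to see what a reader of --help would get.
    print(build_parser().format_help())
```

Compare that with a help screen that only lists `--enable`, `--disable`, and `--device` with no descriptions: the arguments are the same, but only one version helps someone finish the task.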

It’s expensive to create and maintain these content types, but if your documentation team is already integrated with your design and engineering teams and processes (hint), then producing this content extends, rather than transforms, the way technical content is produced at your company.

If your documentation team is focused on putting out the latest fire or picking up what was thrown over the wall, you might need to invest more deeply in what content and customer assistance looks like at your company to provide some of these in-product modalities.

The closer your content teams are to the product design and engineering process, the easier it is to enshrine consistent terminology usage and avoid mismatches with mental models.

These modalities aren’t just for “product growth” initiatives—they’re about customer enablement and support, helping customers use and learn more about how to do things with the product they pay for—just like the documentation does.

Embrace AI: Chatbots and more

In the spirit of meeting your customers (and prospective customers) where they are, you need to embrace AI.

By this, I don’t mean “train a chatbot on your documentation content and make it available to customers”, because that adds a new interface that customers need to discover and learn how to use, and that you then need to maintain 4.

Instead, focus on making your content easily consumable by a language model as training material. That might look like:

- Write succinct and clear content.

- Use consistent terminology.

- Structure your content consistently.

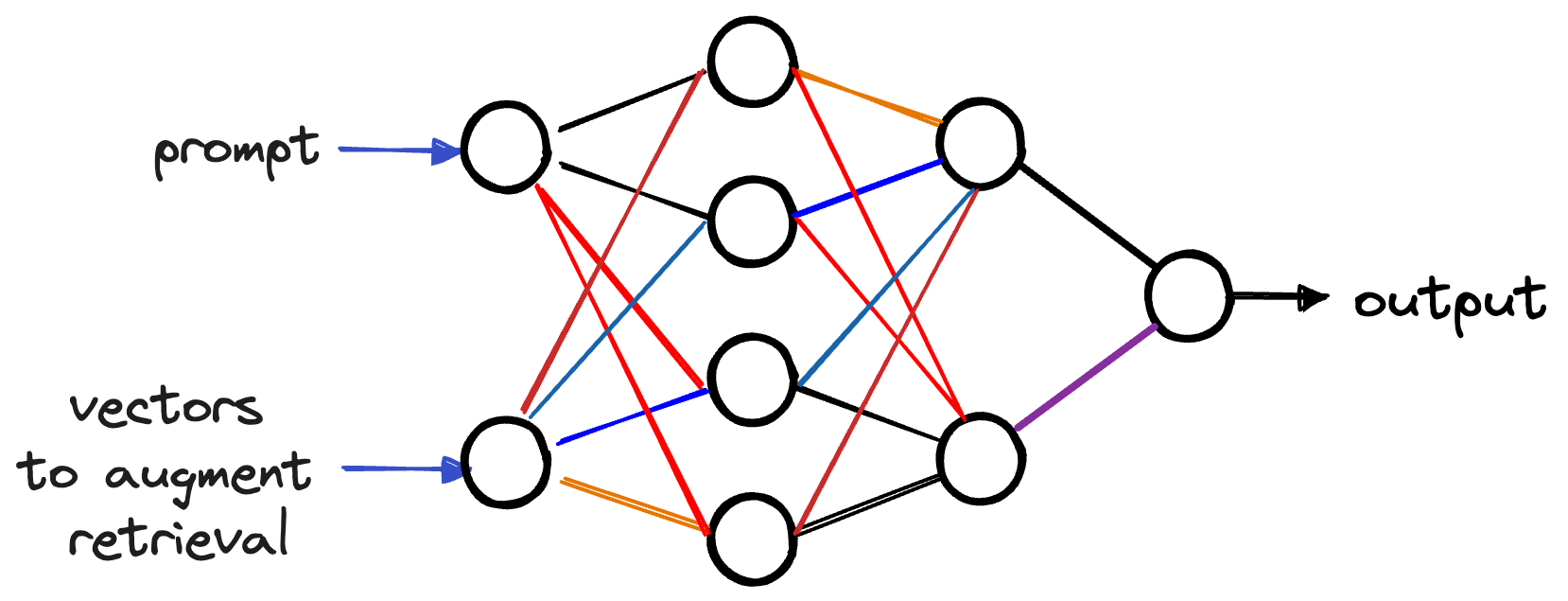

While large language models don’t need to be trained on structured content, the retrieval methods used alongside them typically rely on vector similarity between embedded chunks of text. If your content follows consistent patterns, the vectors computed for related content in your documentation are more likely to reflect what that content is actually about, which makes the relevant content easier to retrieve.

If your content is used for retrieval-augmented generation when prompting a large language model, and the chunks created as part of that process all contain relevant information, the results are more likely to be relevant as well 5.

For example, if your content is always structured with this pattern:

Enable updates for your device

To enable updates, you must have admin privileges on your device.

To enable updates, do the following:

- Log in as an administrator.

- Navigate to the system settings.

- Select system > general > updates.

- For enable updates, select the checkbox.

A language model prompted to retrieve information about the permissions required to enable updates can more easily do so than if your content is structured like this:

To adjust system settings, you must be an administrator.

The available system settings for your device include:

- Network

- Audio

- Appearance

- Privacy and Security

- General

- Battery and Power

It’s important to keep your device updated at all times. It protects you from security threats like malware or viruses. You can set this up automatically if you turn on the correct setting. You can also adjust other settings that can help with security and privacy.

You might notice that the less-structured example is also hard to read and confusing. I’m intentionally exaggerating this example, but the clearer and more concise the content, the more helpful it is to your readers and to LLM tools performing retrieval-augmented generation.
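To show why the structured pattern helps, here is a minimal sketch of heading-based chunking, the kind of split a retrieval pipeline might do before computing vectors. It assumes the content is Markdown with `#`-style headings, which is my assumption and not something every pipeline does. The function name `chunk_by_heading` and the second topic in the sample text are invented for the example, and a real splitter would also need to handle cases like `#` characters inside code blocks.

```python
import re

# Matches Markdown ATX headings such as "## Enable updates".
HEADING = re.compile(r"^#{1,6}\s+(.*)$")


def chunk_by_heading(markdown_text):
    """Split Markdown into chunks that each start at a heading.

    Returns a list of (heading, body) tuples. Text that appears before
    the first heading is returned with an empty heading.
    """
    chunks = []
    heading, body = "", []

    def flush():
        # Skip the initial empty chunk when the text starts with a heading.
        if heading or any(part.strip() for part in body):
            chunks.append((heading, "\n".join(body).strip()))

    for line in markdown_text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            heading, body = match.group(1).strip(), []
        else:
            body.append(line)
    flush()
    return chunks


# Sample content following the structured pattern; the second topic is hypothetical.
SAMPLE = """\
## Enable updates for your device

To enable updates, you must have admin privileges on your device.

1. Log in as an administrator.
2. Select system > general > updates.

## Disable updates for your device

To disable updates, you must have admin privileges on your device.
"""

for heading, body in chunk_by_heading(SAMPLE):
    print(heading, "->", len(body), "characters")
```

With the structured pattern, each chunk carries its task heading, its prerequisite, and its steps together, so a question about required permissions lands on a chunk that contains the answer. Run the same split on the less-structured example and the permissions sentence, the list of settings, and the advice about updates end up in one undifferentiated chunk.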

Improve documentation findability by going beyond the page

Ultimately, the best way to make your documentation easier to find is to put it where your customers and prospects are looking for helpful information:

- Support your community members sharing links to your documentation.

- Share content inside product interfaces (web and otherwise).

- Implement consistent language and patterns to help readers and LLMs retrieve relevant information.

Take your content beyond the webpage and stay helpful!

1. Reports such as: June 2022, “The Open Secret of Google Search” by Charlie Warzel for The Atlantic. May 2023, “What happens when Google Search doesn’t have the answers?” by Nilay Patel for The Verge. June 2023, “A storefront for robots” by Mia Sato for The Verge. August 2023, “Google’s Search Box Changed the Meaning of Information” by Elan Ullendorff for WIRED.

2. Mostly because the best way to ensure high-quality search results for your documentation is to write high-quality documentation that is user-centric and task-oriented, and therefore matches the tasks and keywords that readers are searching to find your content.

3. To be pedantic, algorithms of course are involved in who sees what on Reddit, but the responses and posts in any given subreddit, if well-moderated, are from real people.

4. If you want to create an LLM-based tool that uses your documentation content, consider how to make it findable. For example, you could create a GPT in the OpenAI marketplace that is enhanced with a vector database full of tokens from your documentation site and can answer questions about your product, and perhaps even perform tasks in your product. You could also enhance autocomplete in your product with documentation content that is retrieved using an LLM integration. Think beyond the chatbot.

5. I’m making this assertion based on the blog post “RAG Isn’t So Easy: Why LLM Apps are Challenging and How Unstructured Can Help”, which is confusing to read because the company publishing it is called Unstructured. In the post, the author demonstrates how chunks created with their tool happen at semantically relevant spots, such as before a heading, thus finding that “The RAG system produces higher fidelity responses with Unstructured chunking because the chunks have more consistent semantic meaning, resulting in more relevant query results.”