Developing an AI strategy for documentation

If you find yourself worried about being left behind because you don’t know what to do about AI and technical writing, this blog post is for you.

Lots of people are using AI. As Anil Dash puts it in his blog post I know you don’t want them to want AI, but…:

using AI tools is an incredibly mainstream experience now.

Even if you’re not personally using AI, many readers of the documentation you write likely are, which means your documentation needs to meet them where they are. You need an AI strategy for your documentation.

Why you might need an AI strategy

Information discovery is changing. When you’re looking for an answer to a question, you probably search on Google. It’s been more than two decades since Google launched, so searching feels like second nature.

Before search engines existed, people looked up information in other ways — asking librarians, reading encyclopedias, visiting a trusted website (shoutout Encyclopedia Britannica). After search engines launched, people started to look at the search engine results first (thanks, Ask Jeeves). And if the results were useful and relevant (and they often were), people started to trust the results presented by search engines and rely on them when seeking information.

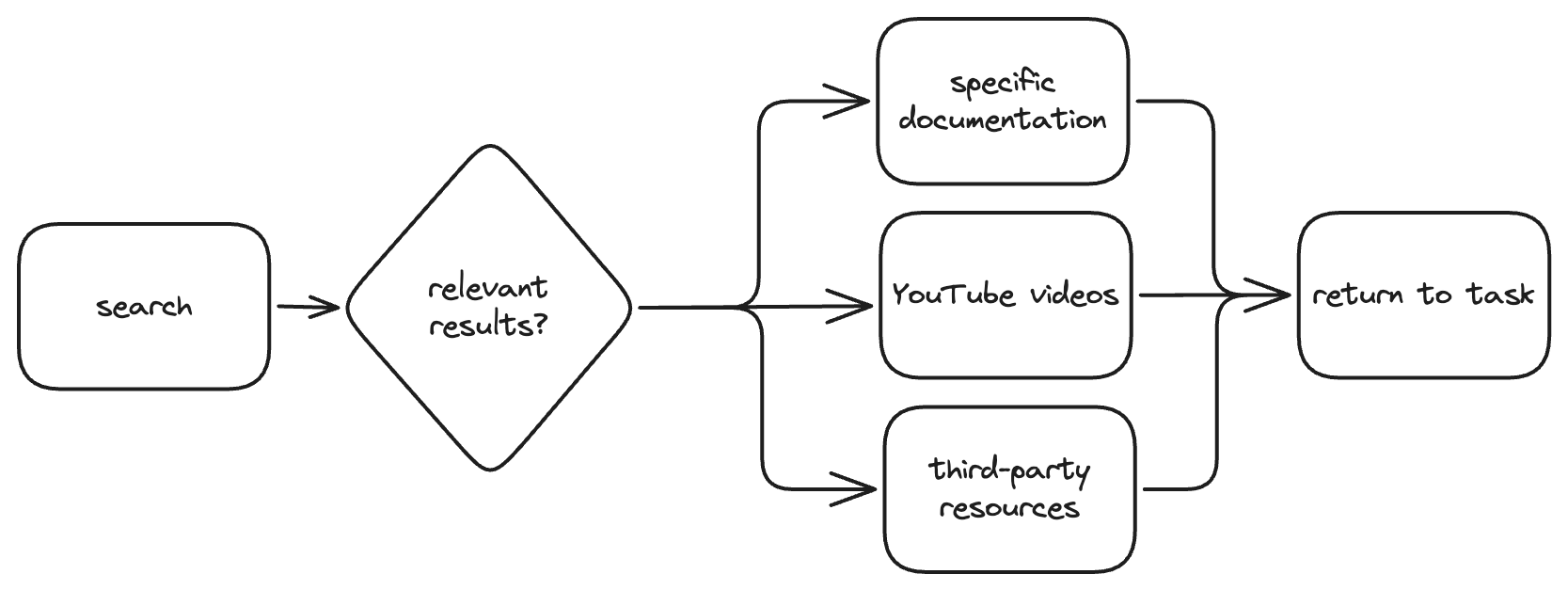

Someone looking for information about your software product can review results containing your documentation, as well as third-party resources and YouTube videos:

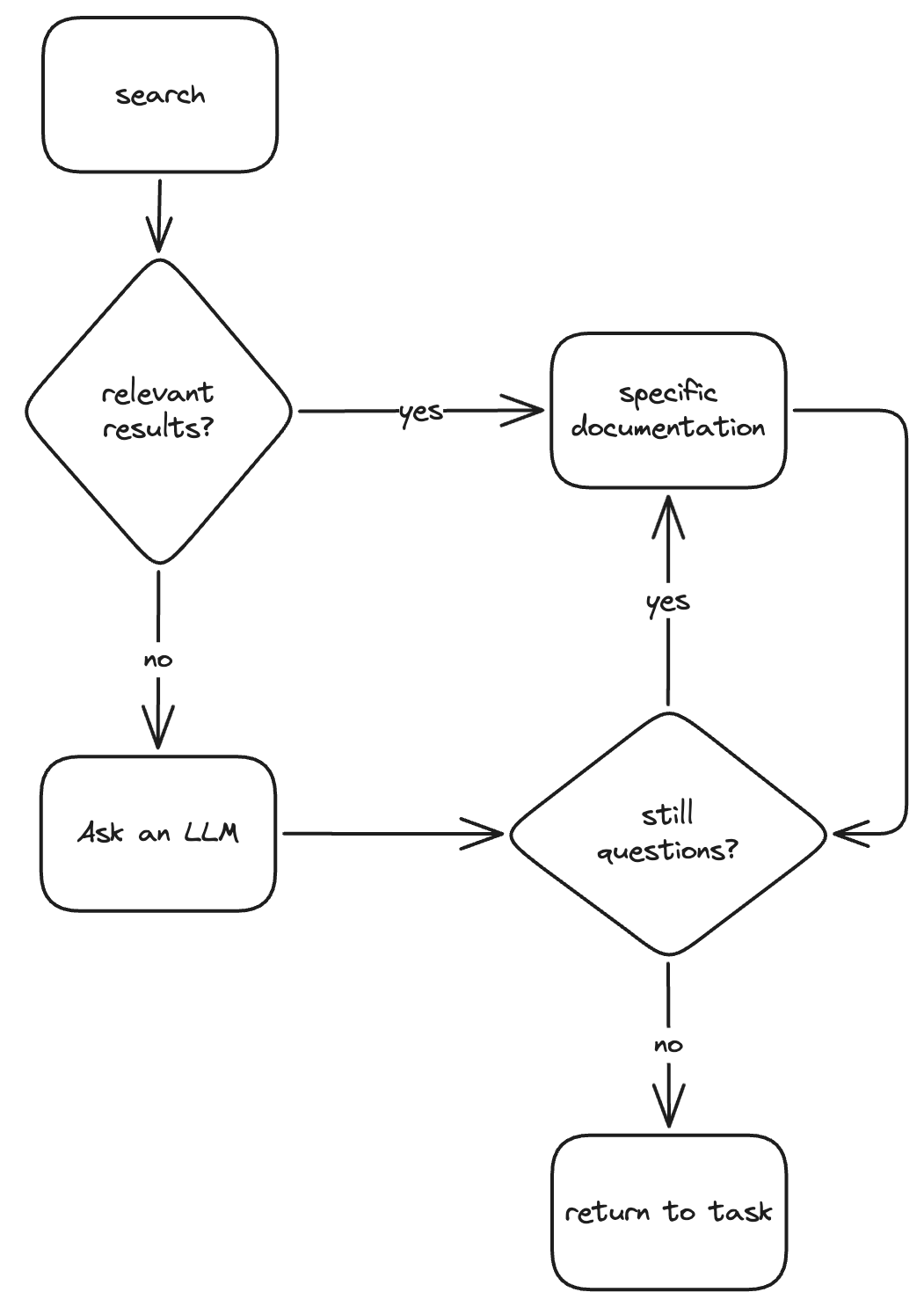

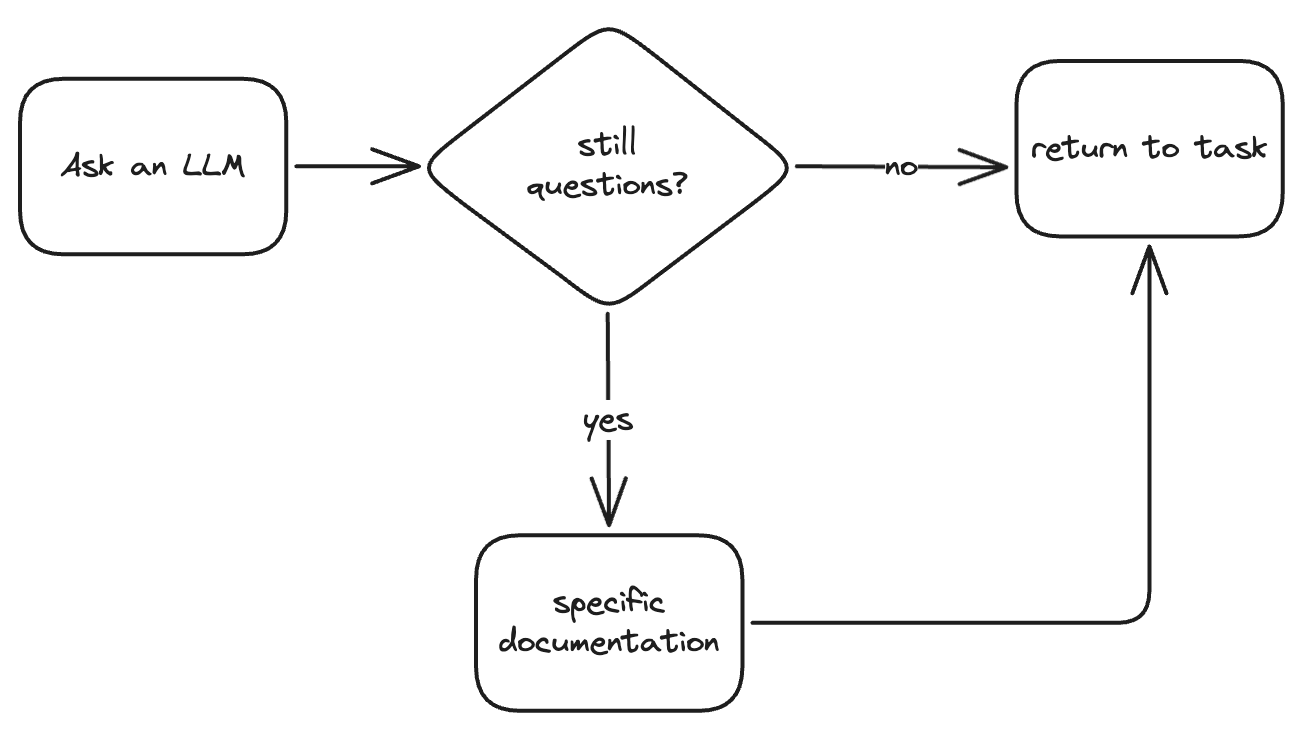

However, with the launch and popularity of ChatGPT, Claude, and other AI-based tools, habits are shifting again for many people. People are experimenting with different ways to seek information. One of those ways is using AI to learn about things:

Over the past few months, I’ve been talking with folks about how they find information and learn about a product. ChatGPT and Claude and Gemini (or at least Google’s “AI overview”) are consistently mentioned as one of the main methods people use. Even if the output isn’t that reliable, visiting an AI tool is still a key part of the information-finding flow.

AI tools are used for all sorts of information-finding flows:

- Answering basic questions about how to accomplish a task.

- Deciphering the potential root cause of an error message and providing next steps for resolution.

- Providing solutions to intricate application design questions.

- Suggesting syntax for code, a formula, or a SQL statement.

You need an AI strategy for your documentation to enable customers using AI to be more successful when using your product to accomplish their goals.

Define your AI strategy

The components of an effective AI strategy include the following:

- Develop: Partner with teams building AI into your product.

- Discover: Evaluate and improve your content and content strategy.

- Measure: Measure how people use AI to interact with your documentation.

- Adapt: Explore use cases for AI in your documentation practice.

Partner with teams building AI into your product

If you partner with teams building AI functionality into your product, you can enrich those product-relevant AI tools with documentation context.

Two common modalities for in-product AI tools are chatbots and agents:

- AI chatbots explaining tasks in the product.

- AI agents performing tasks in the product. Oftentimes, agents have some sort of explanatory component. For example, an AI agent that rewrites documentation markup to fix a syntax error is functioning as an agent, but it might perform tasks as part of a conversational interface like a chatbot.

For both chatbots and agents, documentation is a critical component to ensuring the success of the functionality. Why? Because documentation provides the authoritative source of instructions for performing tasks successfully with the product.

If an AI agent is provided with instructions for performing tasks, it can be more effective in completing those tasks, which makes the person trying to use the AI agent more efficient and successful at their goal.

For an AI chatbot, an ideal experience can look like what Casey Smith describes in 10x Impact: Inside Payabli’s Documentation Revolution:

Customers can ask questions like “build me a config for this service,” and the chat generates working configurations from our documentation.

The process of providing in-app documentation is a lot less complex when one interface can provide on-demand assistance. You avoid a clunky experience where you’re configuring elaborate mappings between product pages and documentation pages, or attempting to make webpage-sized content coherent in a 100px square popover.

If your customers have an existing pattern of completing tasks that your company is already planning to add AI into, make sure you’re involved so that you can add documentation context.

You can invest in building a chatbot with a tool like Fin, Inkeep, or Kapa. But I don’t recommend placing the chatbot on the documentation site.

When you build your own chatbot and provide it on your docs, you create uncertainty for your customers and readers:

- Is the response going to contain accurate and trustworthy information?

- Is this chatbot going to be more reliable than ChatGPT or Claude?

Documentation is perceived as an authoritative source of information. If the official documentation provides a way to get inaccurate or misleading information, readers might lose trust in the documentation itself, and ask: Are AI chatbots docs? (No.)

When customers get stuck with your product, they often have to leave to get help. The advantage of an AI chatbot is that it’s a simple way to provide (qualified, yet potentially unreliable) assistance with using the product inside the product. People expect a chatbot within the product to have context of the authoritative how-to documentation because it exists within the product itself.

Instead of adding yet another interface or entry point for customers to learn, discover, and interact with, focus on providing assistance with the product inside the product.

As part of your AI strategy, identify if there is a team building AI functionality into your product, and then partner with them. If there isn’t, partner with someone in engineering to explore options or opportunities for adding an in-app documentation chatbot that can provide that on-demand assistance.

Improve your content and content strategy

Writing high-quality content for humans also helps AI-based tools, but there are still some accommodations that you can make in your content to help AI tools make better sense of it:

- Expose raw source content and programmatic endpoints.

- Provide decision support and user-centric content.

- Write precise and clear content.

For other guidance on writing for LLMs (and humans too), see the following resources:

- The blog posts listed in Optimizing docs for LLMs in the November 2025 Write the Docs newsletter.

- Writing documentation for AI: best practices by Kapa.ai, a tool that provides retrieval-augmented generation (RAG)-based chatbots.

- Optimizing content for Fin by Intercom, which provides a RAG-based chatbot called Fin.

Expose your content to LLMs

LLMs perform markedly better at answering questions about how your product might work when they have access to the context available on your documentation site.

To help improve the likelihood of high-quality answers from LLMs, implement the following practices:

- Set up an llms.txt file, essentially a sitemap that points to the raw Markdown files of your content, which are easier for LLMs to process.

- Expose a Model Context Protocol (MCP) server for your documentation site to enable AI agents to search and fetch results from your documentation, or even perform interactive tasks in your API documentation.

  - For more about what MCP is and how it might help, see The Model Context Protocol (MCP) and its impact on technical documentation from Cherryleaf.

  - For even more details, check out the podcast episode MCP servers and the role tech writers can play in shaping AI capabilities and outcomes with Tom Johnson, Fabrizio Ferri Benedetti, and Anandi Knuppel.
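As a rough illustration of the llms.txt practice, the following Python sketch renders a minimal file from a list of pages: a title, a one-line summary, and sections of links to raw Markdown. The `DocPage` structure, the `build_llms_txt` helper, and the example.com URLs are all hypothetical, and the layout reflects one reading of the llms.txt proposal, so check the current proposal before relying on it.

```python
from dataclasses import dataclass


@dataclass
class DocPage:
    """One documentation page to list in llms.txt (hypothetical structure)."""
    title: str
    markdown_url: str  # URL of the raw Markdown version of the page
    description: str


def build_llms_txt(site_name: str, summary: str, sections: dict) -> str:
    """Render a minimal llms.txt: H1 title, blockquote summary, H2 link lists.

    `sections` maps a section heading to a list of DocPage entries.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, pages in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for page in pages:
            lines.append(f"- [{page.title}]({page.markdown_url}): {page.description}")
        lines.append("")
    # End the file with exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    # Placeholder content; these are not real documentation pages.
    sections = {
        "Guides": [
            DocPage(
                "Track tasks on a board",
                "https://docs.example.com/guides/track-tasks.md",
                "Plan and follow work with cards.",
            ),
        ],
    }
    print(build_llms_txt("Example Docs", "Documentation for the Example product.", sections))
```

In practice, many documentation platforms and static site generators can produce this file for you, so a script like this is mostly useful for understanding what the file contains.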

Provide decision support and user-centric content

Customers use your product to accomplish goals they have, like “keep track of my work”. Customers aren’t thinking “today I am going to use a Trello board and cards”.

If you write feature-focused documentation, you need to make sure your content ties together the features in coherent, user-centric content that addresses customer goals.

For example:

| Feature-focused | User-centric |
|---|---|
| Create a card on a Trello board | Track tasks on a Trello board |

Or you can split the difference: Create cards to track tasks on a Trello board.

Your customers might already be asking for this type of content (or silently wishing for it), but the LLM tools also need user-centric content to help answer questions about your product more accurately.

When people look for help, a product feature might be the answer, but it’s not the question. People ask chatbots questions, so your documentation must address the question too. Don’t write FAQs (really, don’t). Instead, write user-centric content that addresses both the question and the answer!

If your content only includes information about creating cards, the LLM might make something up because the context about the user goal (tracking tasks) is missing from the documentation. A human might do a decent job at guessing based on prior mental models of task-tracking software. That said, Trello isn’t a great example: products that are available directly to consumers carry somewhat less of this risk, because a substantial amount of third-party content is also being created that might fill that gap for your documentation.

To alleviate the risk of hallucination, write user-centric content that includes decision support to help address questions like “How can I track tasks in Trello?” without literally writing content titled “How can I track tasks in Trello?”.

Write precise and clear content

When LLMs process content from a webpage, the content is split into pieces. For a RAG-based chatbot, this processing is referred to as chunking, and LLMs like ChatGPT and Claude can also chunk content to make retrieving context from linked sources more efficient.

Due to this information processing method, you might want to invest more effort in writing precisely (and possibly also update your style guide!). As quoted in the Write the Docs November 2025 newsletter on Optimizing docs for LLMs:

according to a recent Intercom article by Beth-Ann Sher (Optimizing content for Fin) writing “as if you’re doing a radio interview and you don’t want to be quoted out of context” can be more effective than FAQs.

Given that guidance, it’s crucial to avoid language shortcuts like the following:

- Unclear antecedents

- Excessive use of relative pronouns

- Positional or relational language like “below” or “earlier”

Instead, make references explicit. Refer to specific steps by number, refer to tables by title, or restate the subject instead of using a relative pronoun. Your writing might get more verbose, but it will also get a lot clearer.

For example, given a section of content like:

Before you eat dinner, set up a table for the cheese platter. This helps ensure that people can access the cheese at any time. It should include the following:

- Cheddar

- Emmentaler

- Gouda

For guidance selecting an appropriately sized platter, refer to the steps above.

You might instead rewrite the section of content to read differently:

Before you eat dinner, set up a table for the cheese platter to ensure that anyone can access the cheese at any time. The cheese table should include the following types of cheese:

- Cheddar

- Emmentaler

- Gouda

For guidance selecting an appropriately sized cheese platter, refer to step 2 of this hosting guide, “Select your serving dishes”.

I’ve exaggerated these examples, but as writers, we often take language shortcuts like using relative pronouns or unclear antecedents. By taking the long way and making these references explicit, not only can LLMs more easily process the content, but so can people who process information differently.

For more details about these writing improvements, I recommend the guidance to Be precise in the Splunk Style Guide (which, for the record, predates LLMs).
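To see why these shortcuts cause trouble, it can help to look at content the way a retrieval pipeline might. The following Python sketch splits the first cheese platter example into chunks at blank lines, then flags chunks that contain positional words or sentences that open with a pronoun. The splitting rule, the word lists, and the function names are simplifying assumptions for illustration; real chunkers vary by tool, and the pronoun check is a crude heuristic that also flags sentences whose antecedent is perfectly clear.

```python
import re

# Illustrative word lists, not an exhaustive or authoritative set.
POSITIONAL_WORDS = ("above", "below", "earlier", "later")
AMBIGUOUS_OPENERS = ("it", "this", "that", "these", "those", "they")


def chunk_by_paragraph(text: str) -> list:
    """Split text into chunks at blank lines (a stand-in for real chunking)."""
    return [part.strip() for part in re.split(r"\n\s*\n", text) if part.strip()]


def find_context_risks(chunk: str) -> list:
    """Return reasons this chunk might be unclear when read on its own."""
    risks = []
    # Match whole words so that "Emmentaler" does not count as "later".
    words = re.findall(r"[a-z']+", chunk.lower())
    risks += [f"positional word: {w}" for w in POSITIONAL_WORDS if w in words]
    # Split into sentences after ., !, ?, or : followed by whitespace.
    for sentence in re.split(r"(?<=[.!?:])\s+", chunk):
        first = re.match(r"[A-Za-z']+", sentence.strip())
        if first and first.group(0).lower() in AMBIGUOUS_OPENERS:
            risks.append(f"sentence opens with pronoun: {first.group(0)}")
    return risks


if __name__ == "__main__":
    original = (
        "Before you eat dinner, set up a table for the cheese platter. "
        "This helps ensure that people can access the cheese at any time. "
        "It should include the following:\n\n"
        "- Cheddar\n- Emmentaler\n- Gouda\n\n"
        "For guidance selecting an appropriately sized platter, "
        "refer to the steps above."
    )
    for number, chunk in enumerate(chunk_by_paragraph(original), start=1):
        print(number, find_context_risks(chunk))
```

The last chunk is the clearest case: once it is separated from the rest of the page, "the steps above" points at nothing.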

Measure how people use AI to interact with your documentation

An important component of your AI strategy is understanding how many people are using AI to learn more about your product and interact with your documentation. For the most part, you can measure it!

In addition to SEO (search engine optimization), web marketing has started to refer to AEO (answer engine optimization) and GEO (generative engine optimization) as new acronyms to care about for measuring and optimizing content for LLMs and AI tools.

The tactics for SEO vs AEO or GEO are similar, but measuring AEO and GEO requires a bit more effort than measuring SEO.

As part of measuring AEO and GEO, I suggest expanding your web analytics monitoring to measure the following for your documentation:

- Track referrer traffic originating from LLM chatbots, like chatgpt.com or claude.ai.

  Don’t consider referrer traffic a complete representation, however, because of limitations in how different sites and browsers handle referrer information.

- Identify AI-attributable traffic in the user agent strings, such as traffic from AI crawling bots or traffic attributable to an MCP server enabled for your documentation.

  For a list of known user agents, see Major AI Bot User-Agents on llmsCentral, or AI user-agents, bots, and crawlers to watch from Momentic Marketing.

If you have server-level website monitoring, you can also track more data in request headers, looking for the Signature-Agent field in traffic for your site to identify traffic from AI agents. Read more in Simon Willison’s post ChatGPT agent’s user-agent.
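The three signals described here (referrer, user agent, and the Signature-Agent request header) can be combined into a simple classifier. The following Python sketch is one way to do that, under some assumptions: the `classify_request` function and its labels are hypothetical, the user-agent tokens are a small sample of publicly documented crawler names that will go out of date, and your analytics or logging tool might expose these fields differently.

```python
from typing import Optional
from urllib.parse import urlparse

# Referrer hosts named in this post; extend with others you care about.
AI_REFERRER_HOSTS = {"chatgpt.com", "claude.ai"}

# A small sample of AI user-agent tokens; incomplete and likely to change.
AI_USER_AGENT_TOKENS = ("GPTBot", "ChatGPT-User", "OAI-SearchBot", "ClaudeBot", "PerplexityBot")


def classify_request(referrer: str, user_agent: str, headers: Optional[dict] = None) -> str:
    """Label one request as AI-related or not, using three loose signals."""
    # Header names are case-insensitive, so normalize before looking them up.
    normalized = {key.lower(): value for key, value in (headers or {}).items()}
    if "signature-agent" in normalized:
        return "ai-agent (Signature-Agent header)"

    lowered_agent = user_agent.lower()
    for token in AI_USER_AGENT_TOKENS:
        if token.lower() in lowered_agent:
            return f"ai-bot ({token})"

    # An empty or missing referrer parses to no hostname at all.
    host = urlparse(referrer).hostname or ""
    if host.startswith("www."):
        host = host[len("www."):]
    if host in AI_REFERRER_HOSTS:
        return f"ai-referral ({host})"
    return "other"


if __name__ == "__main__":
    # Made-up requests for illustration only.
    print(classify_request("https://chatgpt.com/", "Mozilla/5.0"))
    print(classify_request("", "Mozilla/5.0 (compatible; GPTBot/1.1)"))
    print(classify_request("https://www.google.com/search", "Mozilla/5.0"))
```

Because each signal is incomplete on its own, treat the counts as a lower bound on AI-driven traffic, not an exact figure.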

Evaluate the performance of AI tools when answering questions

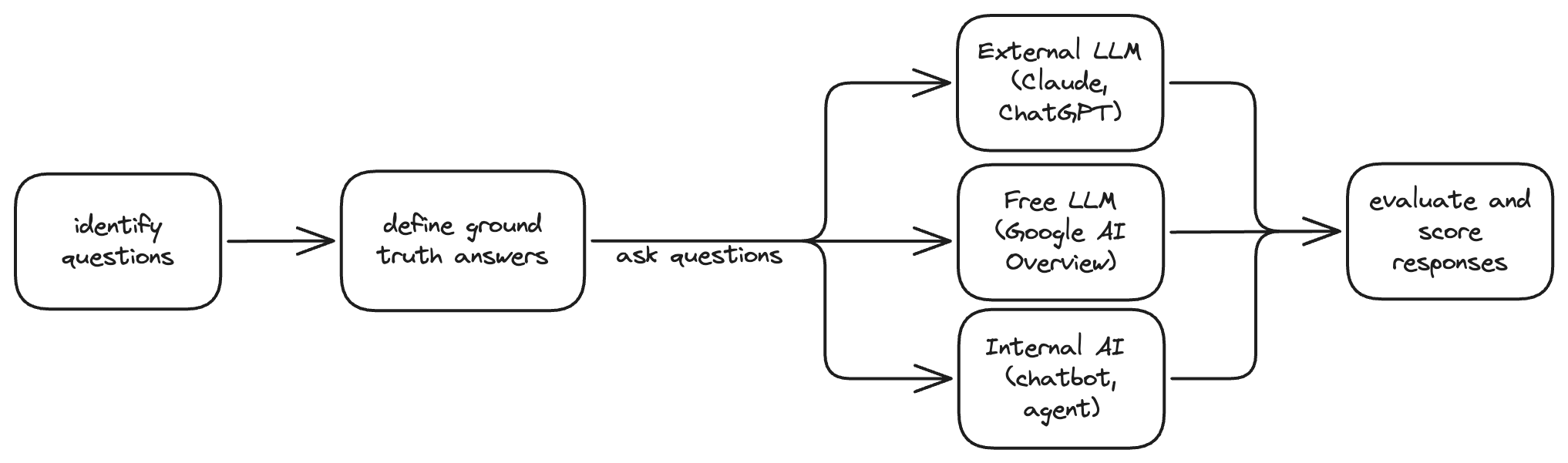

Evaluating the performance of AI tools for answering questions is also something you can measure. This level of evaluation is extremely time-consuming and resource-intensive, so if you’re only planning on implementing a retrieval-augmented generation (RAG)-based documentation chatbot, evaluating LLM performance at this scale is likely excessive. However, this is another case where I’d recommend partnering with any teams working internally on AI functionality to ensure that you aren’t duplicating efforts.

When evaluating the performance of AI tools, you can pay for large-scale QA through tools like Profound or Amplitude, or perform and track evaluation suites that you develop with tools like Gauge or Langfuse.

If you perform manual QA, devise a set of high-value questions to evaluate, choosing from the questions that customers most frequently ask support. If that data isn’t available to you, consider the following sources:

- Common information that customers search for, guided by particularly long queries typed into search.

- High-stakes questions that are important to get right about how your product works.

- Comments that people leave in page quality feedback responses for the documentation site.

After you identify the set of questions, define a high-quality “ground truth” answer for each question to measure the performance of the LLM-based tools against.

After you have the questions and the answers, ask the questions, record the responses, and score the accuracy of the results against the ground truth answer.

Performing evaluations like this over time helps you monitor the quality of results that customers encounter when looking for information.

If you plan to make changes to your content to help LLMs, perform these evaluations before making those changes to develop a wobbly baseline for your product and documentation performance. Performance and quality of LLM-based tools are somewhat inconsistent due to the changes in model performance, system prompts in use, and other factors. Therefore, a wobbly baseline is all that I think you can expect.

Then, after making changes, repeat the evaluation after some time has passed. I recommend waiting at least a week, or even up to a month, to test changes in external systems. With any luck, the external AI tools will be more successful at providing more reliable or higher-quality responses for the questions being asked when compared to your wobbly baseline.
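If you track these evaluations in a script and not a spreadsheet, the bookkeeping might look like the following Python sketch. It assumes a deliberately crude scoring rubric: each ground truth answer is reduced to a few key phrases, and a response scores the fraction of those phrases it contains. The `EvalCase` structure, the function names, the rubric, and the sample responses are all hypothetical, and substring matching is a poor stand-in for human judgment (for example, "card" also matches "discard").

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class EvalCase:
    """One question plus key phrases taken from its ground truth answer."""
    question: str
    required_facts: list


def score_response(case: EvalCase, response: str) -> float:
    """Fraction of required facts that appear in the response (case-insensitive)."""
    if not case.required_facts:
        raise ValueError(f"No required facts defined for: {case.question}")
    text = response.lower()
    hits = sum(1 for fact in case.required_facts if fact.lower() in text)
    return hits / len(case.required_facts)


def score_run(cases: list, responses: dict) -> float:
    """Average score across all cases for one evaluation run.

    `responses` maps each question to the recorded answer from the AI tool;
    a missing answer scores zero.
    """
    return mean(score_response(case, responses.get(case.question, "")) for case in cases)


if __name__ == "__main__":
    question = "How can I track tasks in Trello?"
    cases = [EvalCase(question, ["board", "card"])]

    # Made-up responses standing in for what you record from an AI tool.
    baseline = score_run(cases, {question: "Create a board."})
    later = score_run(cases, {question: "Create a board, then add a card for each task."})
    print(f"baseline={baseline:.2f} later={later:.2f} change={later - baseline:+.2f}")
```

Given how much the tools themselves vary between runs, small changes in the average probably mean little; look for consistent movement across several runs before crediting your content changes.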

If you specifically want to evaluate how well your documentation is optimized for an LLM, you can try asking the AI tool directly, as Casey Smith suggests in AI chat as user research when your reader is a bot:

Running content through an AI chat to ask what it thinks is one of the greatest uses for AI as a technical writer. I’m thinking of it as user research, only my user is an LLM with a chat interface. With the adoption of AI chatbots, it makes sense to include them in our content testing and review plans, right?

The important distinction here is that you’re not replacing user testing with a chatbot, but instead testing the success of a different kind of documentation user — the LLM-based chatbot. This sort of testing is one way to evaluate how effectively an LLM-based tool can retrieve relevant content from specific pages of your documentation when directly prompted to do so.

Explore use cases for AI in your documentation practice

Many teams at software companies are being required to use AI. If you’re not yet in that situation, you might be wondering how long you have until you’re asked about using AI in your work.

If and when your leadership team asks you what your plans are for AI content creation, you want to be prepared. Compile a list of what you might use AI to accomplish in your work.

A list of use cases makes it clear that you’re thinking about AI, and that you’re thinking beyond the most basic consideration for using LLMs in technical documentation:

AI can generate content → Your job is to generate content → AI can do your job

As Fabrizio Ferri Benedetti breaks down in his excellent post Code wikis are documentation theater as a service, generating content from code is not an effective use case for AI in technical writing, so think beyond that.

You want to be strategic and consider where AI might add value to your documentation process. What are the chores and tasks that you don’t like to perform, or that you haven’t found time to do because they would require expertise that you don’t have at the moment?

For each use case on your list, consider how you can test the output and how you will keep a human in the loop to ensure quality output. How do you know that the AI is performing better than a person might? Can you measure the effect of using the tool on your productivity?

Some example use cases that might fit on your list of AI use cases for technical documentation:

- Extend your static site generator to include a custom theme for admonitions using CSS written by an AI assistant.

- Draft a template for new documentation pages to simplify contributions.

- Develop style guide linting rules in Vale based on your current style guide.

- Use an AI-based code reviewer like CodeRabbit to review PRs for style and valid syntax.

- Write alt text for images that humans can review for usefulness and accuracy.

- Convert a screenshot of a diagram into a Mermaid diagram.

- Generate sample data for an interactive tutorial.

- Write a script to auto-generate reference content from code.

- Analyze the metadata of your documentation pages to build a knowledge graph.

The Write the Docs newsletter from July 2025 includes some additional use cases. See How documentarians use AI (or LLMs).

Leadership might not know the intricacies of your job, but you can demonstrate both your ~ growth mindset ~ and your expertise by learning about the tools available to you and how you might use them. (Spoilers: You don’t have to actually use them!)

Implement your AI strategy

AI is changing the way people discover and learn. As a result, to grow and learn as a technical writer, you need to know how to deliver content to those people.

Developing an effective AI strategy for your documentation workflow doesn’t mean adopting everything I’ve described here. Pick and choose what makes sense for your organization, personal bandwidth, or team maturity.

- Partner with teams building AI agents and chatbots in your product

- Make your content easily available to AI chatbots and agents

- Deliver conceptual decision support content

- Write precise and clear content

- Measure AI-driven web traffic

- Evaluate LLM performance for specific product questions

- Explore AI use cases for your own workflows

No matter how tempting it might be, try not to ignore the existence of AI entirely. Defining your own AI strategy is both a proactive and defensive endeavor — learn about a new technology, and defend your expertise with that knowledge.